MoXI (Model eXchange Interlingua): An Intermediate Language to Spur Reproducible and Comparable Model Checking Research

Resource Links

Home: https://modelchecker.temporallogic.org

GitHub Organization: https://modelchecker.github.io/

MoXI Language Definition:

https://github.com/ModelChecker/IL/blob/main/description.md

CAV 2024 Workshop: OSSyM: https://laboratory.temporallogic.org/ossym/

FMCAD 2023 Workshop:

https://github.com/ModelChecker/FMCAD23-Tutorial

SPIN 2024 paper: MoXI semantics:

https://research.temporallogic.org/papers/SPIN2024.pdf

CAV 2024 tool paper: MoXI translators:

https://research.temporallogic.org/papers/CAV2024.pdf

artifact: https://zenodo.org/records/10946779

Model Checkers That Natively Use MoXI

MoXIChecker:

by LMU Munich and National Taiwan University

https://gitlab.com/sosy-lab/software/moxichecker

Kind2 (KMoXI)

by University of Iowa

https://github.com/kind2-mc/kind2 (with make kmoxi)

Black (Bounded Ltl sAtisfiability cheCKer)

by University of Bozen-Bolzano, Italy

https://www.black-sat.org

Pono

by Stanford

https://github.com/stanford-centaur/pono

Sally

by SRI

(MoXI encoding coming soon)

https://sri-csl.github.io/sally/

Formally: the open-source formal methods toolchain

by the open-source research community and University of Bozen-Bolzano

https://www.formally.fm/

Supported by NSF Project: Developing an Open-Source, State-of-the-Art Symbolic Model-Checking Framework for the Model-Checking Research Community

Abstract

Safety-critical and security-critical systems are entering our lives at an increasingly rapid pace. These are the systems that help fly our planes, drive our cars, deliver our packages, ensure our electricity, or even automate our homes. Especially when humans cannot perform a task in person, e.g., due to a dangerous working environment, we depend on such systems. Before any safety-critical system launches into the human environment, we need to be sure it is really safe. Model checking is a popular and appealing way to rigorously check for safety: given a system, or an accurate model of the system, and a safety requirement, model checking is a “push button” technique to produce either a proof that the system always operates safely, or a counterexample detailing a system execution that violates the safety requirement. Many aspects of model checking are active research areas, including more efficient ways of reasoning about the system’s behavior space, and faster search algorithms for the proofs and counterexamples.

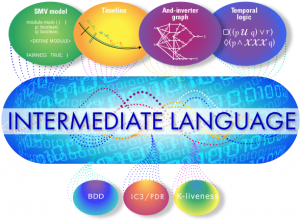

As model checking becomes more integrated into the standard design and verification process for safety-critical systems, the platforms for model checking research have become more limited. Previous options have become closed-source or industry tools; current research platforms don’t have support for expressive specification languages needed for verifying real systems. Our goal is to fill the current gap in model checking research platforms: building a freely-available, open-source, scalable model checking infrastructure that accepts expressive models and efficiently interfaces with the currently-maintained state-of-the-art back-end algorithms to provide an extensible research and verification tool. We will create a community resource with a well-documented intermediate representation to enable extensibility, and a web portal, facilitating new modeling languages and back-end algorithmic advances. To add new modeling languages or algorithms, researchers need only to develop a translator to/from the new intermediate language, and will then be able to integrate each advance with the full state-of-the-art in model checking. This community infrastructure will be ideal for catapulting formal verification efforts in many cutting-edge application areas, including security, networking, and operating system verification. We particularly target outreach to the embedded systems (CPS) community as our new framework will make hardware verification problems from this community more accessible.

Slides: why are we doing this?

Slides: Draft Details of a Model Checking Intermediate Language (Updated 1/2023)

Send your comments